VLBR Hosts Symposium on Venture Capital

Source: Virginia Law and Business Review

The Virginia Law & Business Review hosted its 2026 Symposium on the Future of Emerging Companies and Venture Capital (EC/VC) in Corporate Law on Friday, February 6. Professor George Geis opened the day with a talk on current market terms in VC investing.

The second event was a panel moderated by Professor Rob Masri ’96 and featuring attorneys Kevin Clayton (Col. ’92, Law ’98), John Lynch, and Steve Kaplan (Col. ’02, Law ’05), from Hogan Lovells, Wilson Sonsini, and Pillsbury, respectively. The panelists spoke on trends in the VC climate. Professor Masri described a fairly hot market, especially at the early stage, including some “mega deals.” Panelists noted that, compared with years past, capital formation now takes longer and involves many more financing rounds, which shapes negotiation strategy. Concessions made early on carry over into later rounds, but founders can also use predictions about later rounds as leverage in earlier ones.

A longer-term trend is that differences between the East and West Coasts are less salient than in the past, although regions with relatively fewer market participants, such as the South, can still have their own nuances. For international investors, different and more onerous regulatory regimes are a major concern.

The panelists also discussed the rewarding aspects of the work. Whereas clients in many practice areas may view lawyers as roadblocks, founders actively seek out attorneys who can connect them to investors. Along the way, attorneys can offer business-oriented advice as well as legal services, and they often serve as trusted advisors to startups with limited staff. One panelist emphasized the satisfaction of watching a client grow from a software engineer in a garage into a mature entrepreneur.

The attorneys also offered differing perspectives on the impact of artificial intelligence (AI) on legal work. Although one panelist said his firm used it only lightly outside of litigation, the others said they used it substantially, emphasizing that it saves significant time on document review and drafting. They also noted, however, that new technology has been reshaping legal practice for decades; AI has had a major impact, but it extends this trend rather than creating a paradigm shift. Still, they agreed that training new talent will be a challenge as tasks traditionally done by junior lawyers become automated.

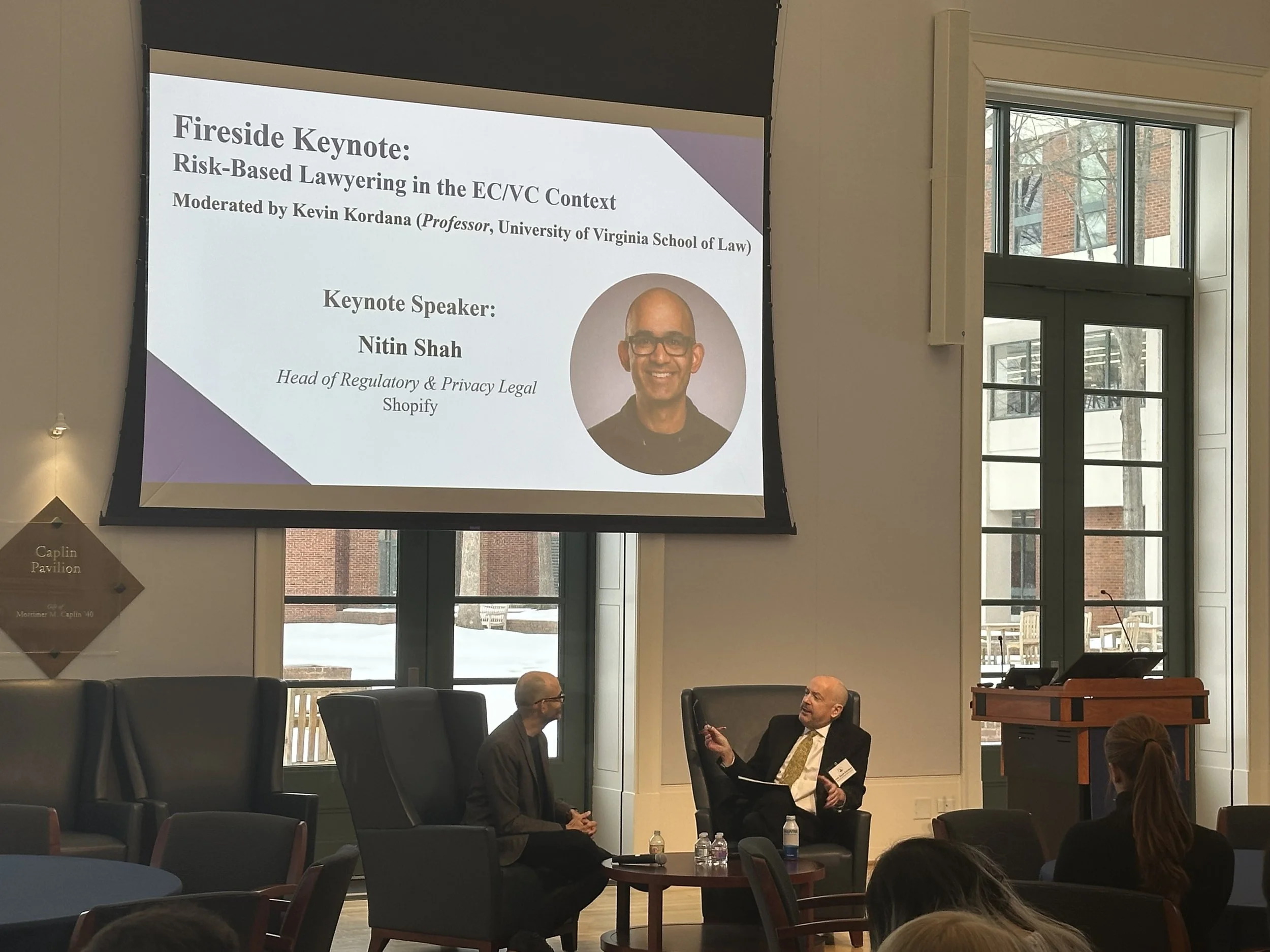

After lunch, the symposium concluded with a discussion between Professor Kevin Kordana and Nitin Shah ’09 on risk-based lawyering in the EC/VC context. Shah worked extensively in the federal government before becoming associate general counsel at Shopify, where he leads the regulatory and privacy legal teams.

Shah contrasted the ways that government and firms view regulation. Regulators tend to see things as an on-off switch, in which actions are either permitted or forbidden, whereas firms treat regulatory exposure as one potential cost among many in their decision-making. Because the government has very limited resources to enforce regulations, counsel must advise firms on the likelihood and potential magnitude of enforcement actions under different business strategies, so that firms can shape their behavior to maximize profit rather than avoid regulatory risk altogether. The exceptions arise when an industry is in the government’s crosshairs, as crypto was under the Biden administration, or when an action could seriously damage the firm’s reputation. As Shah put it, attorneys need to understand managers’ mindsets in order to keep their credibility for those occasions when they must categorically advise against a decision.

Shah and Professor Kordana also discussed the regulatory risks around AI, particularly as agentic AI, which operates autonomously with limited oversight, comes into greater use. They considered how well traditional doctrines such as respondeat superior can cope with these risks. Shah connected the politics of regulation to market structure. Analysts often divide the market into large developers that produce the underlying AI models, smaller deployers that train specific applications, and end users. Events of the past week suggest that the market has concluded that a few large developers will drive the future of AI. The risk is that these developers will further entrench themselves through favorable regulation. In principle, liability should fall on parties that can cheaply monitor AI applications and that have the ability to pay damages. But powerful developers and end users, who have greater access to legislators, may persuade them to place the regulatory burden on deployers, widening the developers’ moat and limiting competition. Shah observed that developers have a powerful argument that the US government must support AI development to stay ahead of China. Professor Kordana noted how quickly discussions of regulation shift from policy questions to public choice theory.

Clear takeaways included the growing impact of AI and regulation, and the need for attorneys to understand both the law and their clients’ business needs. Students no doubt appreciated the perspectives that practicing lawyers brought to these issues.